Team


Chair Holder

Prof. Dr. Stefan Schneegass

- Room:

- S-E 404

- Phone:

- +49 201 18-34251

- Email:

- stefan.schneegass (at) uni-due.de

- Office hours:

- by appointment

- Author profiles:

- ORCID

- Google Scholar

- Address:

- Universität Duisburg-Essen

Institut für Informatik und Wirtschaftsinformatik (ICB)

paluno - The Ruhr Institute for Software Technology

Human-Computer Interaction

Schützenbahn 70

45127 Essen

About:

Stefan Schneegass is a professor of computer science at the University of Duisburg-Essen. He received his doctorate from the University of Stuttgart in 2016. Since starting his PhD in 2012, he has worked on various national and international research projects and has published at the leading conferences and in the leading journals in human-computer interaction. Stefan holds a bachelor's and a master's degree from the University of Duisburg-Essen. During his studies, he worked as a student assistant at the Chair of Pervasive Computing and User Interface Engineering and at DFKI Saarbrücken. He currently conducts research in human-computer interaction, focusing on mobile, wearable, and ubiquitous interaction.

Research areas:

An overview of my research is available on my Google Scholar and DBLP pages.

Publications:

- Keppel, Jonas; Strauss, Marvin; Haliburton, Luke; Weingärtner, Henrike; Dominiak, Julia; Faltaous, Sarah; Gruenefeld, Uwe; Mayer, Sven; Woźniak, Paweł W.; Schneegass, Stefan: Situated Artifacts Amplify Engagement in Physical Activity. In: Proceedings of the 2025 ACM Designing Interactive Systems Conference, vol. 2025 (2025). doi:10.1145/3715336.3735690

In the context of rising sedentary lifestyles, this paper investigates the efficacy of “Situated Artifacts” in promoting physical activity. We designed two artifacts that display users’ physical activity data within their homes – one physical and one digital. We conducted a 9-week, counterbalanced, within-subject field study with N = 24 participants to assess the impact of these artifacts on physical activity, reflection, and motivation. We collected quantitative data on physical activity, administered daily and weekly questionnaires employing individual Likert items and standardized instruments, and conducted interviews after prototype usage. Our findings indicate that while both artifacts act as reminders for physical activity, the physical artifact was superior in terms of user engagement. The study revealed that this can be attributed to the higher perceived presence and, thereby, enhanced social interaction, which acts as a motivational source for activity. In this sense, situated artifacts gently nudge toward sustainable health behavior change.

- Keppel, Jonas; Strauss, Marvin; Zhang, Shuoheng; Stroehnisch, Markus; Lewin, Stefan; Gruenefeld, Uwe; Degraen, Donald; Goedicke, David; Matviienko, Andrii; Schneegass, Stefan: The Impact of Bike-Based Controllers and Adaptive Feedback on Immersion and Enjoyment in a Virtual Reality Cycling Exergame. In: Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, 2025. doi:10.1145/3706599.3720096

Cycling exergames can increase enjoyment and promote high energy expenditure, making exercise more engaging and, therefore, supporting healthier lifestyles. To improve player experience in a virtual reality cycling exergame using a stationary bike, we investigated how different input and output techniques affect player engagement. We implemented a bike-based controller integrating button and shoulder-lean steering as input, combined with or without adaptive changes in bike inclination and resistance as output. The results of our study with 24 participants indicate that adaptive modes increase effort and perceived exertion. While button steering provides better pragmatic quality, shoulder-lean steering offers a more hedonic experience but requires more skill and effort. Still, this greater enjoyment fosters higher engagement, particularly when players enter a flow state where the increased physical demands become less noticeable. These findings underscore the potential of bike-based adaptive controllers to maximize player engagement and enhance VR cycling exergame experiences.

- Wald, Iddo Yehoshua; Degraen, Donald; Maimon, Amber; Keppel, Jonas; Schneegass, Stefan; Malaka, Rainer: Demonstrating Spatial Haptics: A Sensory Substitution Method for Distal Object Detection Using Tactile Cues. In: Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, 2025. doi:10.1145/3706599.3721281

We present Spatial Haptics, a sensory substitution method for representing locations of remote objects in 3D space via haptics. Spatial Haptics imitates auditory localization processes to enable vibrotactile localization abilities similar to those of some animal species. We developed two implementations of the localization method, which modulate the vibration amplitude of the controllers relative to a target object in Virtual Reality. In Ear-Based Localization, vibrations are modulated based on the relative locations of the ears to the target, while in Hand-Based Localization, the amplitude is determined based on the relative locations of the hands to the target. In this interactive demonstration, users can experience the vibrotactile localization approaches in an interactive VR mini-game. Their task is to locate the target object in a scene consisting of multiple moving objects. By experiencing spatial localization using haptics hands-on, participants can evaluate the benefits of this sensory substitution approach for detecting distal objects.

- Wald, Iddo Yehoshua; Degraen⁎, Donald; Maimon⁎, Amber; Keppel, Jonas; Schneegass, Stefan; Malaka, Rainer: Spatial Haptics: A Sensory Substitution Method for Distal Object Detection Using Tactile Cues. In: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, 2025. doi:10.1145/3706598.3714083

We present a sensory substitution-based method for representing locations of remote objects in 3D space via haptics. By imitating auditory localization processes, we enable vibrotactile localization abilities similar to those of some spiders, elephants, and other species. We evaluated this concept in virtual reality by modulating the vibration amplitude of two controllers depending on relative locations to a target. We developed two implementations applying this method using either ear or hand locations. A proof-of-concept study assessed localization performance and user experience, achieving under 30° differentiation between horizontal targets with no prior training. This unique approach enables localization by using only two actuators, requires low computational power, and could potentially assist users in gaining spatial awareness in challenging environments. We compare the implementations and discuss the use of hands as ears in motion, a novel technique not previously explored in the sensory substitution literature.

- Keppel, Jonas; Strauss, Marvin; Gruenefeld, Uwe; Schneegass, Stefan: Magic Mirror: Designing a Weight Change Visualization for Domestic Use. In: Proc. ACM Hum.-Comput. Interact., vol. 8 (2024). doi:10.1145/3698149

Virtual mirrors displaying weight changes can support users in forming healthier habits by visualizing potential future body shapes. However, these often come with privacy, feasibility, and cost limitations. This paper introduces the Magic Mirror, a novel distortion-based mirror that leverages curvature effects to alter the appearance of body size while preserving privacy. We constructed the Magic Mirror and compared it to a video-based alternative. In an online study (N=115), we determined the optimal parameters for each system, comparing weight change visualizations and manipulation levels. Afterward, we conducted a laboratory study (N=24) to compare the two systems in terms of user perception, motivational potential, and willingness to use daily. Our findings indicate that the Magic Mirror surpasses the video-based mirror in terms of suitability for residential application, as it addresses feasibility concerns commonly associated with virtual mirrors. Our work demonstrates that mirrors that display weight changes can be implemented in users’ homes without any cameras, ensuring privacy.

- Keppel, Jonas; Schneegass, Stefan: Buying vs. Building: Can Money Fix Everything When Prototyping in CyclingHCI for Sports? CHI 2024, Honolulu, Hawai'i, 2024.

This position paper explores the dichotomy of building versus buying in the domain of Cycling Human-Computer Interaction (CyclingHCI) for sports applications. Our research focuses on indoor cycling to enhance physical activity through sports motivation. Recognizing that the social component is crucial for many users to sustain regular sports engagement, we investigate the integration of social elements within indoor cycling topics. We outline two approaches: First, we utilize Virtual Reality (VR) Exergames using a bike-based controller as input and actuator aiming for immersive experiences and, therefore, buying a commercial smartbike, the Wahoo KickR Bike. Second, we enhance traditional spinning classes with interactive technology to foster social components during courses, building self-made sensors for attachment to mechanical indoor bikes. Finally, we discuss the challenges and benefits of constructing custom sensors versus purchasing commercial bike trainers. Our lessons learned contribute to informed decision-making in CyclingHCI prototyping, balancing innovation with practicality.

- Pascher, Max; Saad, Alia; Liebers, Jonathan; Heger, Roman; Gerken, Jens; Schneegass, Stefan; Gruenefeld, Uwe: Hands-On Robotics: Enabling Communication Through Direct Gesture Control. In: Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI '24 Companion), March 11–14, 2024, Boulder, CO, USA. ACM, Boulder, Colorado, USA, 2024. doi:10.1145/3610978.3640635

Effective Human-Robot Interaction (HRI) is fundamental to seamlessly integrating robotic systems into our daily lives. However, current communication modes require additional technological interfaces, which can be cumbersome and indirect. This paper presents a novel approach, using direct motion-based communication by moving a robot's end effector. Our strategy enables users to communicate with a robot by using four distinct gestures: two handshakes ('formal' and 'informal') and two letters ('W' and 'S'). As a proof-of-concept, we conducted a user study with 16 participants, capturing subjective experience ratings and objective data for training machine learning classifiers. Our findings show that the four different gestures performed by moving the robot's end effector can be distinguished with close to 100% accuracy. Our research offers implications for the design of future HRI interfaces, suggesting that motion-based interaction can empower human operators to communicate directly with robots, removing the necessity for additional hardware.

- Liebers, Carina; Pfützenreuter, Niklas; Auda, Jonas; Gruenefeld, Uwe; Schneegass, Stefan: “Computer, Generate!” – Investigating User Controlled Generation of Immersive Virtual Environments. In: HHAI 2024: Hybrid Human AI Systems for the Social Good. IOS Press, Malmö, 2024, pp. 213–227. doi:10.3233/FAIA240196

For immersive experiences such as virtual reality, explorable worlds are often fundamental. Generative artificial intelligence looks promising to accelerate the creation of such environments. However, it remains unclear how existing interaction modalities can support user-centered world generation and how users remain in control of the process. Thus, in this paper, we present a virtual reality application to generate virtual environments and compare three common interaction modalities (voice, controller, and hands) in a pre-study (N = 18), revealing a combination of initial voice input and continued controller manipulation as best suitable. We then investigate three levels of process control (all-at-once, creation-before-manipulation, and step-by-step) in a user study (N = 27). Our results show that although all-at-once reduced the number of object manipulations, participants felt more in control when using the step-by-step approach.

- Ivezić, Dijana; Keppel, Jonas; Horneber, David; Becker, Christine; Laumer, Sven; Walle, Hardy; Schneegass, Stefan; Amft, Oliver: EghiFit: Smartphone based Behaviour Monitoring and Health Recommendation in a Weight Loss Intervention Study. In: F1000Research, vol. 13 (2024), p. 1347.

- Keppel, Jonas; Ivezić, Dijana; Gruenefeld, Uwe; Lukowicz, Paul; Amft, Oliver; Schneegass, Stefan: AI and Health: Using Digital Twins to Foster Healthy Behavior. In: Mensch und Computer 2024-Workshopband. 2024. doi:10.18420/muc2024-mci-ws05-114

- Liebers, Carina; Pfützenreuter, Niklas; Prochazka, Marvin; Megarajan, Pranav; Furuno, Eike; Löber, Jan; Stratmann, Tim C.; Auda, Jonas; Degraen, Donald; Gruenefeld, Uwe; Schneegass, Stefan: Look Over Here! Comparing Interaction Methods for User-Assisted Remote Scene Reconstruction. In: Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, 2024. doi:10.1145/3613905.3650982

Detailed digital representations of physical scenes are key in many cases, such as historical site preservation or hazardous area inspection. To automate the capturing process, robots or drones mounted with sensors can algorithmically record the environment from different viewpoints. However, environmental complexities often lead to incomplete captures. We believe humans can support scene capture as their contextual understanding enables easy identification of missing areas and recording errors. Therefore, they need to perceive the recordings and suggest new sensor poses. In this work, we compare two human-centric approaches in Virtual Reality for scene reconstruction through the teleoperation of a remote robot arm, i.e., directly providing sensor poses (direct method) or specifying missing areas in the scans (indirect method). Our results show that directly providing sensor poses leads to higher efficiency and user experience. In future work, we aim to compare the quality of human assistance to automatic approaches.

- Ivezić, Dijana; Keppel, Jonas; Schneegass, Stefan; Amft, Oliver: Patient Adherence and Challenges in a Weight Loss Study: Smartphone Data Stream and Gamification. Gesellschaft für Informatik e.V., 2024. doi:10.18420/muc2024-mci-ws05-222

This paper presents findings from our implementation of a context-aware health guidance system for obese individuals, with a focus on smartphone- and smartwatch-based health monitoring and participant adherence. To aid participants in weight loss, our system utilizes data from wearables and smartphones, integrating nutrition tracking and gamification elements into a smartphone application and a web-based health dashboard for health coaches. Eight participants completed a 120-day field study to evaluate the system and examine user adherence to health monitoring and the effectiveness of gamification in a weight loss program. Data on steps, sleep, heart rate, weather, and manually logged meals were collected. Adherence varied across data types, with step counts being the most consistently collected, while sleep and heart rate data were limited due to inconsistent smartwatch usage.

- Liebers, Carina; Megarajan, Pranav; Auda, Jonas; Stratmann, Tim C.; Pfingsthorn, Max; Gruenefeld, Uwe; Schneegass, Stefan: Keep the Human in the Loop: Arguments for Human Assistance in the Synthesis of Simulation Data for Robot Training. In: Multimodal Technologies and Interaction, vol. 8 (2024), p. 18. doi:10.3390/mti8030018

Robot training often takes place in simulated environments, particularly with reinforcement learning. Therefore, multiple training environments are generated using domain randomization to ensure transferability to real-world applications and compensate for unknown real-world states. We propose improving domain randomization by involving human application experts in various stages of the training process. Experts can provide valuable judgments on simulation realism, identify missing properties, and verify robot execution. Our human-in-the-loop workflow describes how they can enhance the process in five stages: validating and improving real-world scans, correcting virtual representations, specifying application-specific object properties, verifying and influencing simulation environment generation, and verifying robot training. We outline examples and highlight research opportunities. Furthermore, we present a case study in which we implemented different prototypes, demonstrating the potential of human experts in the given stages. Our early insights indicate that human input can benefit robot training at different stages.

- Faltaous, Sarah; Williamson, Julie R.; Koelle, Marion; Pfeiffer, Max; Keppel, Jonas; Schneegass, Stefan: Understanding User Acceptance of Electrical Muscle Stimulation in Human-Computer Interaction. In: Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, 2024. doi:10.1145/3613904.3642585

Electrical Muscle Stimulation (EMS) has unique capabilities that can manipulate users’ actions or perceptions, such as actuating user movement while walking, changing the perceived texture of food, and guiding movements for a user learning an instrument. These applications highlight the potential utility of EMS, but such benefits may be lost if users reject EMS. To investigate user acceptance of EMS, we conducted an online survey (N = 101). We compared eight scenarios, six from HCI research applications and two from the sports and health domain. To gain further insights, we conducted in-depth interviews with a subset of the survey respondents (N = 10). The results point to the challenges and potential of EMS regarding social and technological acceptance, showing that there is greater acceptance of applications that manipulate action than those that manipulate perception. The interviews revealed safety concerns and user expectations for the design and functionality of future EMS applications.

- Saad, Alia; Pascher, Max; Kassem, Khaled; Heger, Roman; Liebers, Jonathan; Schneegass, Stefan; Gruenefeld, Uwe: Hand-in-Hand: Investigating Mechanical Tracking for User Identification in Cobot Interaction. In: Proceedings of International Conference on Mobile and Ubiquitous Multimedia (MUM). Vienna, Austria, 2023. doi:10.1145/3626705.3627771

Robots play a vital role in modern automation, with applications in manufacturing and healthcare. Collaborative robots integrate human and robot movements. Therefore, it is essential to ensure that interactions involve qualified, and thus identified, individuals. This study delves into a new approach: identifying individuals through robot arm movements. Different from previous methods, users guide the robot, and the robot senses the movements via joint sensors. We asked 18 participants to perform six gestures, revealing their potential use as unique behavioral traits or biometrics and achieving F1-scores of up to 0.87, which suggests direct robot interaction as a promising avenue for implicit and explicit user identification.

- Auda, Jonas; Grünefeld, Uwe; Faltaous, Sarah; Mayer, Sven; Schneegass, Stefan: A Scoping Survey on Cross-reality Systems. In: ACM Computing Surveys. 2023. doi:10.1145/3616536

Immersive technologies such as Virtual Reality (VR) and Augmented Reality (AR) empower users to experience digital realities. Known as distinct technology classes, the lines between them are becoming increasingly blurry with recent technological advancements. New systems enable users to interact across technology classes or transition between them—referred to as cross-reality systems. Nevertheless, these systems are not well understood. Hence, in this article, we conducted a scoping literature review to classify and analyze cross-reality systems proposed in previous work. First, we define these systems by distinguishing three different types. Thereafter, we compile a literature corpus of 306 relevant publications, analyze the proposed systems, and present a comprehensive classification, including research topics, involved environments, and transition types. Based on the gathered literature, we extract nine guiding principles that can inform the development of cross-reality systems. We conclude with research challenges and opportunities.

- Auda, Jonas; Grünefeld, Uwe; Mayer, Sven; Faltaous, Sarah; Schneegass, Stefan: The Actuality-Time Continuum: Visualizing Interactions and Transitions Taking Place in Cross-Reality Systems. In: IEEE ISMAR 2023. Sydney, 2023.

In the last decade, researchers contributed an increasing number of cross-reality systems and their evaluations. Going beyond individual technologies such as Virtual or Augmented Reality, these systems introduce novel approaches that help to solve relevant problems such as the integration of bystanders or physical objects. However, cross-reality systems are complex by nature, and describing the interactions and transitions taking place is a challenging task. Thus, in this paper, we propose the idea of the Actuality-Time Continuum that aims to enable researchers and designers alike to visualize complex cross-reality experiences. Moreover, we present four visualization examples that illustrate the potential of our proposal and conclude with an outlook on future perspectives.

- Keppel, Jonas; Strauss, Marvin; Faltaous, Sarah; Liebers, Jonathan; Heger, Roman; Gruenefeld, Uwe; Schneegass, Stefan: Don't Forget to Disinfect: Understanding Technology-Supported Hand Disinfection Stations. In: Proc. ACM Hum.-Comput. Interact., vol. 7 (2023). doi:10.1145/3604251

The global COVID-19 pandemic created a constant need for hand disinfection. While it is still essential, disinfection use is declining with the decrease in perceived personal risk (e.g., as a result of vaccination). Thus, this work explores using different visual cues as reminders for hand disinfection. We investigated different public display designs using (1) paper-based only, adding (2) screen-based, or (3) projection-based visual cues. To gain insights into these designs, we conducted semi-structured interviews with passersby (N=30). Our results show that the screen- and projection-based conditions were perceived as more engaging. Furthermore, we conclude that the disinfection process consists of four steps that can be supported: drawing attention to the disinfection station, supporting the (subconscious) understanding of the interaction, motivating hand disinfection, and performing the action itself. We conclude with design implications for technology-supported disinfection.

- Liebers, Carina; Prochazka, Marvin; Pfützenreuter, Niklas; Liebers, Jonathan; Auda, Jonas; Gruenefeld, Uwe; Schneegass, Stefan: Pointing It out! Comparing Manual Segmentation of 3D Point Clouds between Desktop, Tablet, and Virtual Reality. In: International Journal of Human–Computer Interaction (2023), pp. 1–15. doi:10.1080/10447318.2023.2238945

Scanning everyday objects with depth sensors is the state-of-the-art approach to generating point clouds for realistic 3D representations. However, the resulting point cloud data suffers from outliers and contains irrelevant data from neighboring objects. To obtain only the desired 3D representation, additional manual segmentation steps are required. In this paper, we compare three different technology classes as independent variables (desktop vs. tablet vs. virtual reality) in a within-subject user study (N = 18) to understand their effectiveness and efficiency for such segmentation tasks. We found that desktop and tablet still outperform virtual reality regarding task completion times, while we could not find a significant difference between them in the effectiveness of the segmentation. In the post hoc interviews, participants preferred the desktop due to its familiarity and temporal efficiency and virtual reality due to its given three-dimensional representation.

- Pascher, Max; Grünefeld, Uwe; Schneegass, Stefan; Gerken, Jens: How to Communicate Robot Motion Intent: A Scoping Review. In: ACM (Ed.): Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23). 2023. doi:10.1145/3544548.3580857

Robots are becoming increasingly omnipresent in our daily lives, supporting us and carrying out autonomous tasks. In Human-Robot Interaction, human actors benefit from understanding the robot's motion intent to avoid task failures and foster collaboration. Finding effective ways to communicate this intent to users has recently received increased research interest. However, no common language has been established to systematize robot motion intent. This work presents a scoping review aimed at unifying existing knowledge. Based on our analysis, we present an intent communication model that depicts the relationship between robot and human through different intent dimensions (intent type, intent information, intent location). We discuss these different intent dimensions and their interrelationships with different kinds of robots and human roles. Throughout our analysis, we classify the existing research literature along our intent communication model, allowing us to identify key patterns and possible directions for future research.

- Pascher, Max; Franzen, Til; Kronhardt, Kirill; Grünefeld, Uwe; Schneegass, Stefan; Gerken, Jens: HaptiX: Vibrotactile Haptic Feedback for Communication of 3D Directional Cues. In: ACM (Ed.): Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems - Extended Abstract (CHI ’23). 2023. doi:10.1145/3544549.3585601

In Human-Computer Interaction, vibrotactile haptic feedback offers the advantage of being independent of any visual perception of the environment. Most importantly, the user's field of view is not obscured by user interface elements, and the visual sense is not unnecessarily strained. This is especially advantageous when the visual channel is already busy, or the visual sense is limited. We developed three design variants based on different vibrotactile illusions to communicate 3D directional cues. In particular, we explored two variants based on the vibrotactile illusion of the cutaneous rabbit and one based on apparent vibrotactile motion. To communicate gradient information, we combined these with pulse-based and intensity-based mapping. A subsequent study showed that the pulse-based variants based on the vibrotactile illusion of the cutaneous rabbit are suitable for communicating both directional and gradient characteristics. The results further show that a representation of 3D directions via vibrations can be effective and beneficial.

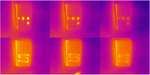

- Saad, Alia; Izadi, Kian; Ahmad Khan, Anam; Knierim, Pascal; Schneegass, Stefan; Alt, Florian; Abdelrahman, Yomna: HotFoot: Foot-Based User Identification Using Thermal Imaging. In: Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, 2023. doi:10.1145/3544548.3580924

We propose a novel method for seamlessly identifying users by combining thermal and visible feet features. While it is known that users’ feet have unique characteristics, these have so far been underutilized for biometric identification, as observing those features often requires the removal of shoes and socks. As thermal cameras are becoming ubiquitous, we foresee a new form of identification, using feet features and heat traces to reconstruct the footprint even while wearing shoes or socks. We collected a dataset of users’ feet (N = 21), wearing three types of footwear (personal shoes, standard shoes, and socks) on three floor types (carpet, laminate, and linoleum). By combining visual and thermal features, an AUC between 91.1% and 98.9% can be achieved, depending on floor type and shoe type, with personal shoes on linoleum floor performing best. Our findings demonstrate the potential of thermal imaging for continuous and unobtrusive user identification.

- Keppel, Jonas; Gruenefeld, Uwe; Strauss, Marvin; Gonzalez, Luis Ignacio Lopera; Amft, Oliver; Schneegass, Stefan: Reflecting on Approaches to Monitor User's Dietary Intake. MobileHCI 2022, Vancouver, Canada, 2022.

Monitoring dietary intake is essential to providing user feedback and achieving a healthier lifestyle. In the past, different approaches for monitoring dietary behavior have been proposed. In this position paper, we first present an overview of the state-of-the-art techniques grouped by image- and sensor-based approaches. After that, we introduce a case study in which we present a Wizard-of-Oz approach as an alternative and non-automatic monitoring method.

- Detjen, Henrik; Faltaous, Sarah; Keppel, Jonas; Prochazka, Marvin; Gruenefeld, Uwe; Sadeghian, Shadan; Schneegass, Stefan: Investigating the Influence of Gaze- and Context-Adaptive Head-up Displays on Take-Over Requests. In: ACM (Ed.): AutomotiveUI '22: Proceedings of the 14th International Conference on Automotive User Interfaces and Interactive Vehicular Applications. 2022. doi:10.1145/3543174.3546089

In Level 3 automated vehicles, preparing drivers for take-over requests (TORs) on the head-up display (HUD) requires their repeated attention. Visually salient HUD elements can distract attention from potentially critical parts in a driving scene during a TOR. Further, attention is (a) meanwhile needed for non-driving-related activities and can (b) be over-requested. In this paper, we conduct a driving simulator study (N=12), varying required attention by HUD warning presence (absent vs. constant vs. TOR-only) across gaze-adaptivity (with vs. without) to fit warnings to the situation. We found that (1) drivers value visual support during TORs, (2) gaze-adaptive scene complexity reduction works but creates a benefit-neutralizing distraction for some, and (3) drivers perceive constant HUD warnings as annoying and distracting over time. Our findings highlight the need for (a) HUD adaptation based on user activities and potential TORs and (b) sparse use of warning cues in future HUD designs.

- Faltaous, Sarah; Prochazka, Marvin; Auda, Jonas; Keppel, Jonas; Wittig, Nick; Gruenefeld, Uwe; Schneegass, Stefan: Give Weight to VR: Manipulating Users’ Perception of Weight in Virtual Reality with Electric Muscle Stimulation. Association for Computing Machinery, New York, NY, USA, 2022. doi:10.1145/3543758.3547571

Virtual Reality (VR) devices empower users to experience virtual worlds through rich visual and auditory sensations. However, believable haptic feedback that communicates the physical properties of virtual objects, such as their weight, is still unsolved in VR. The current trend towards hand tracking-based interactions, neglecting the typical controllers, further amplifies this problem. Hence, in this work, we investigate the combination of passive haptics and electric muscle stimulation to manipulate users’ perception of weight, and thus, simulate objects with different weights. In a laboratory user study, we investigate four differing electrode placements, stimulating different muscles, to determine which muscle results in the most potent perception of weight with the highest comfort. We found that actuating the biceps brachii or the triceps brachii muscles increased the weight perception of the users. Our findings lay the foundation for future investigations on weight perception in VR.

- Grünefeld, Uwe; Geilen, Alexander; Liebers, Jonathan; Wittig, Nick; Koelle, Marion; Schneegass, Stefan: ARm Haptics: 3D-Printed Wearable Haptics for Mobile Augmented Reality. In: Proc. ACM Hum.-Comput. Interact., Vol. 6 (2022). doi:10.1145/3546728

Augmented Reality (AR) technology enables users to superimpose virtual content onto their environments. However, interacting with virtual content while mobile often requires users to perform interactions in mid-air, resulting in a lack of haptic feedback. Hence, in this work, we present the ARm Haptics system, which is worn on the user's forearm and provides 3D-printed input modules, each representing well-known interaction components such as buttons, sliders, and rotary knobs. These modules can be changed quickly, thus allowing users to adapt them to their current use case. After an iterative development of our system, which involved a focus group with HCI researchers, we conducted a user study to compare the ARm Haptics system to hand-tracking-based interaction in mid-air (baseline). Our findings show that using our system results in significantly lower error rates for slider and rotary input. Moreover, use of the ARm Haptics system results in significantly higher pragmatic quality and lower effort, frustration, and physical demand. Following our findings, we discuss opportunities for haptics worn on the forearm.

- Grünefeld, Uwe; Auda, Jonas; Mathis, Florian; Schneegass, Stefan; Khamis, Mohamed; Gugenheimer, Jan; Mayer, Sven: VRception: Rapid Prototyping of Cross-Reality Systems in Virtual Reality. In: Proceedings of the 41st ACM Conference on Human Factors in Computing Systems (CHI). Association for Computing Machinery, New Orleans, United States, 2022. doi:10.1145/3491102.3501821

Cross-reality systems empower users to transition along the reality-virtuality continuum or collaborate with others experiencing different manifestations of it. However, prototyping these systems is challenging, as it requires sophisticated technical skills, time, and often expensive hardware. We present VRception, a concept and toolkit for quick and easy prototyping of cross-reality systems. By simulating all levels of the reality-virtuality continuum entirely in Virtual Reality, our concept overcomes the asynchronicity of realities, eliminating technical obstacles. Our VRception Toolkit leverages this concept to allow rapid prototyping of cross-reality systems and easy remixing of elements from all continuum levels. We replicated six cross-reality papers using our toolkit and presented them to their authors. Interviews with them revealed that our toolkit sufficiently replicates their core functionalities and allows quick iterations. Additionally, remote participants used our toolkit in pairs to collaboratively implement prototypes in about eight minutes that they would have otherwise expected to take days.

- Auda, Jonas; Grünefeld, Uwe; Schneegass, Stefan: If The Map Fits! Exploring Minimaps as Distractors from Non-Euclidean Spaces in Virtual Reality. In: CHI 22. ACM, 2022. doi:10.1145/3491101.3519621

- Auda, Jonas; Grünefeld, Uwe; Kosch, Thomas; Schneegass, Stefan: The Butterfly Effect: Novel Opportunities for Steady-State Visually-Evoked Potential Stimuli in Virtual Reality. In: Augmented Humans. Kashiwa, Chiba, Japan, 2022. doi:10.1145/3519391.3519397

- Liebers, Jonathan; Brockel, Sascha; Gruenefeld, Uwe; Schneegass, Stefan: Identifying Users by Their Hand Tracking Data in Augmented and Virtual Reality. In: International Journal of Human–Computer Interaction (2022). doi:10.1080/10447318.2022.2120845

Nowadays, Augmented and Virtual Reality devices are widely available and are often shared among users due to their high cost. Thus, distinguishing users to offer personalized experiences is essential. However, currently used explicit user authentication (e.g., entering a password) is tedious and vulnerable to attack. Therefore, this work investigates the feasibility of implicitly identifying users by their hand tracking data. In particular, we identify users by their uni- and bimanual finger behavior gathered from their interaction with eight different universal interface elements, such as buttons and sliders. In two sessions, we recorded the tracking data of 16 participants while they interacted with various interface elements in Augmented and Virtual Reality. We found that user identification is possible with up to 95% accuracy across sessions using an explainable machine learning approach. We conclude our work by discussing differences between interface elements and feature importance to provide implications for behavioral biometric systems.

- Keppel, Jonas; Liebers, Jonathan; Auda, Jonas; Gruenefeld, Uwe; Schneegass, Stefan: ExplAInable Pixels: Investigating One-Pixel Attacks on Deep Learning Models with Explainable Visualizations. In: Proceedings of the 21st International Conference on Mobile and Ubiquitous Multimedia. Association for Computing Machinery, New York, NY, USA, 2022, pp. 231-242. doi:10.1145/3568444.3568469

Nowadays, deep learning models enable numerous safety-critical applications, such as biometric authentication, medical diagnosis support, and self-driving cars. However, previous studies have frequently demonstrated that these models are attackable through slight modifications of their inputs, so-called adversarial attacks. Hence, researchers proposed investigating examples of these attacks with explainable artificial intelligence to understand them better. In this line, we developed an expert tool to explore adversarial attacks and defenses against them. To demonstrate the capabilities of our visualization tool, we worked with the publicly available CIFAR-10 dataset and generated one-pixel attacks. After that, we conducted an online evaluation with 16 experts. We found that our tool is usable and practical, providing evidence that it can support understanding, explaining, and preventing adversarial examples.

- Liebers, Jonathan; Horn, Patrick; Burschik, Christian; Gruenefeld, Uwe; Schneegass, Stefan: Using Gaze Behavior and Head Orientation for Implicit Identification in Virtual Reality. In: Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology (VRST). Association for Computing Machinery, Osaka, Japan, 2021. doi:10.1145/3489849.3489880

Identifying users of a Virtual Reality (VR) headset provides designers of VR content with the opportunity to adapt the user interface, set user-specific preferences, or adjust the level of difficulty either for games or training applications. While most identification methods currently rely on explicit input, implicit user identification is less disruptive and does not impact the immersion of the users. In this work, we introduce a biometric identification system that employs the user’s gaze behavior as a unique, individual characteristic. In particular, we focus on the user’s gaze behavior and head orientation while following a moving stimulus. We verify our approach in a user study. A hybrid post-hoc analysis results in an identification accuracy of up to 75% for an explainable machine learning algorithm and up to 100% for a deep learning approach. We conclude by discussing application scenarios in which our approach can be used to implicitly identify users.

- Auda, Jonas; Mayer, Sven; Verheyen, Nils; Schneegass, Stefan: Flyables: Haptic Input Devices for Virtual Reality using Quadcopters. In: VRST. 2021. doi:10.1145/3489849.3489855

- Auda, Jonas; Grünefeld, Uwe; Pfeuffer, Ken; Rivu, Radiah; Alt, Florian; Schneegass, Stefan: I'm in Control! Transferring Object Ownership Between Remote Users with Haptic Props in Virtual Reality. In: Proceedings of the 9th ACM Symposium on Spatial User Interaction (SUI). Association for Computing Machinery, 2021. doi:10.1145/3485279.3485287

- Saad, Alia; Liebers, Jonathan; Gruenefeld, Uwe; Alt, Florian; Schneegass, Stefan: Understanding Bystanders’ Tendency to Shoulder Surf Smartphones Using 360-Degree Videos in Virtual Reality. In: Proceedings of the 23rd International Conference on Mobile Human-Computer Interaction (MobileHCI). Association for Computing Machinery, Toulouse, France, 2021. doi:10.1145/3447526.3472058

Shoulder surfing is an omnipresent risk for smartphone users. However, investigating these attacks in the wild is difficult because of either privacy concerns, lack of consent, or the fact that asking for consent would influence people’s behavior (e.g., they could try to avoid looking at smartphones). Thus, we propose utilizing 360-degree videos in Virtual Reality (VR), recorded in staged real-life situations on public transport. Despite differences between perceiving videos in VR and experiencing real-world situations, we believe this approach yields novel insights into observers’ tendency to shoulder surf another person’s phone authentication and interaction. By conducting a study (N=16), we demonstrate that a better understanding of shoulder surfers’ behavior can be obtained by analyzing gaze data during video watching and comparing it to post-hoc interview responses. On average, participants looked at the phone for about 11% of the time it was visible and could remember half of the applications used.

- Auda, Jonas; Grünefeld, Uwe; Schneegass, Stefan: Enabling Reusable Haptic Props for Virtual Reality by Hand Displacement. In: Proceedings of the Conference on Mensch Und Computer (MuC). Association for Computing Machinery, Ingolstadt, Germany, 2021, pp. 412-417. doi:10.1145/3473856.3474000

Virtual Reality (VR) enables compelling visual experiences. However, providing haptic feedback is still challenging. Previous work suggests utilizing haptic props to overcome such limitations and presents evidence that props could function as a single haptic proxy for several virtual objects. In this work, we displace users’ hands to account for virtual objects that are smaller or larger. Hence, the haptic prop used can represent several differently sized virtual objects. We conducted a user study (N = 12) and presented our participants with two tasks during which we repeatedly handed them the same haptic prop while they saw differently sized virtual objects in VR. In the first task, we used a linear hand displacement and increased the size of the virtual object to understand when participants perceive a mismatch. In the second task, we compared the linear displacement to logarithmic and exponential displacements. We found that participants, on average, do not perceive the size mismatch for virtual objects up to 50% larger than the physical prop. However, we did not find any differences between the explored displacement functions. We conclude our work with future research directions.

- Faltaous, Sarah; Gruenefeld, Uwe; Schneegass, Stefan: Towards a Universal Human-Computer Interaction Model for Multimodal Interactions. In: Proceedings of the Conference on Mensch Und Computer (MuC). Association for Computing Machinery, Ingolstadt, Germany, 2021, pp. 59-63. doi:10.1145/3473856.3474008

Models in HCI describe and provide insights into how humans use interactive technology. They are used by engineers, designers, and developers to understand and formalize the interaction process. At the same time, novel interaction paradigms constantly arise, introducing new ways in which interactive technology can support humans. In this work, we look into how these paradigms can be described using the classical HCI model introduced by Schomaker in 1995. We extend this model by presenting new relations that provide a better understanding of these paradigms. For this, we revisit the existing interaction paradigms and try to describe their interaction using this model. The goal of this work is to highlight the need to adapt the models to new interaction paradigms and to spark discussion in the HCI community on this topic.

- Faltaous, Sarah; Janzon, Simon; Heger, Roman; Strauss, Marvin; Golkar, Pedram; Viefhaus, Matteo; Prochazka, Marvin; Gruenefeld, Uwe; Schneegass, Stefan: Wisdom of the IoT Crowd: Envisioning a Smart Home-Based Nutritional Intake Monitoring System. In: Proceedings of the Conference on Mensch Und Computer (MuC). Association for Computing Machinery, Ingolstadt, Germany, 2021, pp. 568-573. doi:10.1145/3473856.3474009

Obesity and overweight are two factors linked to various health problems that can, in the long run, lead to death. Technological advancements have created the opportunity to build smart interventions. These interventions could be operated by the Internet of Things (IoT), which connects different smart home and wearable devices, providing a large pool of data. In this work, we use IoT with different technologies to present an exemplary nutritional intake monitoring system. This system integrates the input from various devices to better understand the users’ behavior and provide recommendations accordingly. Furthermore, we report on a preliminary evaluation through semi-structured interviews with six participants. Their feedback highlights the system’s opportunities and challenges.

- Auda, Jonas; Weigel, Martin; Cauchard, Jessica; Schneegass, Stefan: Understanding Drone Landing on the Human Body. In: 23rd International Conference on Mobile Human-Computer Interaction. 2021. doi:10.1145/3447526.3472031

- Auda, Jonas; Heger, Roman; Gruenefeld, Uwe; Schneegaß, Stefan: VRSketch: Investigating 2D Sketching in Virtual Reality with Different Levels of Hand and Pen Transparency. In: 18th International Conference on Human–Computer Interaction (INTERACT). Springer, Bari, Italy, 2021, pp. 195-211. doi:10.1007/978-3-030-85607-6_14

Sketching is a vital step in design processes. While analog sketching with pen and paper is the de facto standard, Virtual Reality (VR) seems promising for improving the sketching experience. It provides myriad new opportunities to express creative ideas. Unlike in reality, drawbacks of pen-and-paper drawing can be tackled by altering the virtual environment. In this work, we investigate how hand and pen transparency impacts users’ 2D sketching abilities. We conducted a lab study (N=20) investigating different combinations of hand and pen transparency. Our results show that a more transparent pen helps users sketch more quickly, while a transparent hand slows them down. Further, we found that transparency improves sketching accuracy when drawing in the direction occupied by the user’s hand.

- Arboleda, S. A.; Pascher, Max; Lakhnati, Y.; Gerken, Jens: Understanding Human-Robot Collaboration for People with Mobility Impairments at the Workplace, a Thematic Analysis. In: 29th IEEE International Conference on Robot and Human Interactive Communication. ACM, 2021. doi:10.1109/RO-MAN47096.2020.9223489

Assistive technologies, such as human-robot collaboration, have the potential to ease the lives of people with physical mobility impairments in social and economic activities. Currently, this group of people has lower rates of economic participation due to the lack of adequate environments adapted to their capabilities. We take a closer look at the needs and preferences of people with physical mobility impairments in a human-robot cooperative environment at the workplace. Specifically, we aim to design how people with physical mobility impairments can control a robotic arm in manufacturing tasks. We present a case study of a sheltered workshop as a prototype for an institution that employs people with disabilities in manufacturing jobs. Here, we collected data from potential end-users with physical mobility impairments, social workers, and supervisors using a participatory design technique (Future Workshop). These stakeholders were divided into two groups, primary users (end-users) and secondary users (social workers, supervisors), and each group took part in a separate session. The gathered information was analyzed using thematic analysis to reveal underlying themes across stakeholders. We identified concepts that highlight underlying concerns related to the robot fitting into the social and organizational structure, human-robot synergy, and human-robot problem management. In this paper, we present our findings and discuss the implications of each theme for shaping an inclusive human-robot cooperative workstation for people with physical mobility impairments.

- Liebers, Jonathan; Abdelaziz, Mark; Mecke, Lukas; Saad, Alia; Auda, Jonas; Alt, Florian; Schneegaß, Stefan: Understanding User Identification in Virtual Reality Through Behavioral Biometrics and the Effect of Body Normalization. In: Proceedings of the 40th ACM Conference on Human Factors in Computing Systems (CHI). Association for Computing Machinery, Yokohama, Japan, 2021. doi:10.1145/3411764.3445528

Virtual Reality (VR) is becoming increasingly popular both in the entertainment and professional domains. Behavioral biometrics have recently been investigated as a means to continuously and implicitly identify users in VR. Applications in VR can specifically benefit from this, for example, to adapt virtual environments and user interfaces as well as to authenticate users. In this work, we conduct a lab study (N = 16) to explore how accurately users can be identified during two task-driven scenarios based on their spatial movement. We show that an identification accuracy of up to 90% is possible across sessions recorded on different days. Moreover, we investigate the role of users’ physiology in behavioral biometrics by virtually altering and normalizing their body proportions. We find that body normalization in general increases the identification rate, in some cases by up to 38%; hence, it improves the performance of identification systems.

- Borsum, Florian; Pascher, Max; Auda, Jonas; Schneegass, Stefan; Lux, Gregor; Gerken, Jens: Stay on Course in VR: Comparing the Precision of Movement between Gamepad, Armswinger, and Treadmill: Kurs Halten in VR: Vergleich Der Bewegungspräzision von Gamepad, Armswinger Und Laufstall. In: Mensch Und Computer 2021. Association for Computing Machinery, New York, NY, USA, 2021, pp. 354-365. doi:10.1145/3473856.3473880

This paper investigates the extent to which different locomotion techniques in Virtual Reality environments influence the precision of interaction. We examined three techniques in total: two of them involve physical activity to create a high degree of realism in the movement (armswinger, treadmill), and a gamepad served as the third technique and baseline. In a study with 18 participants, we examined the precision of these three locomotion techniques across six different obstacles in a VR obstacle course. The results show that for individual obstacles that require a combination of forward and sideways movement (slalom, cliff) or that target speed (rail), the treadmill enables significantly more precise control than the armswinger. Across the entire course, however, no input device is significantly more precise than another. In addition, using the treadmill takes significantly more time than using the gamepad or the armswinger. We also found that the goal of reproducing a real walking movement 1:1 is still not achieved even with a treadmill, although the movement is nevertheless perceived as intuitive and immersive.

- Arevalo Arboleda, Stephanie; Pascher, Max; Baumeister, Annalies; Klein, Barbara; Gerken, Jens: Reflecting upon Participatory Design in Human-Robot Collaboration for People with Motor Disabilities: Challenges and Lessons Learned from Three Multiyear Projects. In: The 14th PErvasive Technologies Related to Assistive Environments Conference. Association for Computing Machinery, New York, NY, USA, 2021, pp. 147-155. doi:10.1145/3453892.3458044

Human-robot technology has the potential to positively impact the lives of people with motor disabilities. However, current efforts have mostly been oriented towards technology (sensors, devices, modalities, interaction techniques), thus leaving users and their valuable input by the wayside. In this paper, we aim to present a holistic perspective of the role of participatory design in Human-Robot Collaboration (HRC) for People with Motor Disabilities (PWMD). We have been involved in several multiyear projects related to HRC for PWMD, where we encountered different challenges related to planning and participation, preferences of stakeholders, using certain participatory design techniques, technology exposure, as well as ethical, legal, and social implications. These challenges helped us derive five lessons learned that could serve as a guideline for researchers using participatory design with vulnerable groups, in particular early-career researchers who are starting to explore HRC for people with disabilities.

- Pascher, Max; Baumeister, Annalies; Schneegass, Stefan; Klein, Barbara; Gerken, Jens: Recommendations for the Development of a Robotic Drinking and Eating Aid - An Ethnographic Study. In: Ardito, Carmelo; Lanzilotti, Rosa; Malizia, Alessio; Petrie, Helen; Piccinno, Antonio; Desolda, Giuseppe; Inkpen, Kori (Eds.): Human-Computer Interaction – INTERACT 2021. Springer International Publishing, Cham, 2021, pp. 331-351.

Being able to live independently and self-determined in one's own home is a crucial factor for human dignity and the preservation of self-worth. For people with severe physical impairments who cannot use their limbs for everyday tasks, living in their own home is only possible with assistance from others. The inability to move arms and hands makes it hard to take care of oneself, e.g., to drink and eat independently. In this paper, we investigate how 15 participants with disabilities consume food and drinks. We report on interviews and participatory observations and analyze the aids they currently use. Based on our findings, we derive a set of recommendations that supports researchers and practitioners in designing future robotic drinking and eating aids for people with disabilities.

- Faltaous, Sarah; Neuwirth, Joshua; Gruenefeld, Uwe; Schneegass, Stefan: SaVR: Increasing Safety in Virtual Reality Environments via Electrical Muscle Stimulation. In: 19th International Conference on Mobile and Ubiquitous Multimedia (MUM). Association for Computing Machinery, Essen, Germany, 2020, pp. 254-258. doi:10.1145/3428361.3428389

One of the main benefits of interactive Virtual Reality (VR) applications is that they provide a high sense of immersion. As a result, users lose their sense of real-world space, which makes them vulnerable to collisions with real-world objects. In this work, we propose a novel approach to prevent such collisions using Electrical Muscle Stimulation (EMS). EMS actively prevents the movement that would result in a collision by actuating the antagonist muscle. We report on a user study comparing our approach to the commonly used feedback modalities: audio, visual, and vibro-tactile. Our results show that EMS is a promising modality for restraining user movement and, at the same time, was rated best in terms of user experience.

- Detjen, Henrik; Geisler, Stefan; Schneegass, Stefan: "Help, Accident Ahead!": Using Mixed Reality Environments in Automated Vehicles to Support Occupants After Passive Accident Experiences. In: AutomotiveUI (adjunct) 2020. ACM, 2020. doi:10.1145/3409251.3411723

- Detjen, Henrik; Pfleging, Bastian; Schneegass, Stefan: A Wizard of Oz Field Study to Understand Non-Driving-Related Activities, Trust, and Acceptance of Automated Vehicles. In: AutomotiveUI 2020. ACM, 2020. doi:10.1145/3409120.3410662

- Schneegaß, Stefan; Auda, Jonas; Heger, Roman; Grünefeld, Uwe; Kosch, Thomas: EasyEG: A 3D-printable Brain-Computer Interface. In: Proceedings of the 33rd ACM Symposium on User Interface Software and Technology (UIST). Minnesota, USA, 2020. doi:10.1145/3379350.3416189

Brain-Computer Interfaces (BCIs) are progressively being adopted by the consumer market, making them available for a variety of use cases. However, off-the-shelf BCIs are limited in their adjustability to individual head shapes, in the evaluation of scalp-electrode contact, and in extensibility through additional sensors. This work presents EasyEG, a BCI headset that is adaptable to individual head shapes and offers adjustable electrode-scalp contact to improve measuring quality. EasyEG consists of 3D-printed and low-cost components that can be extended by additional sensing hardware, hence expanding the application domain of current BCIs. We conclude with use cases that demonstrate the potential of our EasyEG headset.

- Liebers, Jonathan; Schneegass, Stefan: Gaze-based Authentication in Virtual Reality. In: ETRA. ACM, 2020. doi:10.1145/3379157.3391421

- Agarwal, Shivam; Auda, Jonas; Schneegaß, Stefan; Beck, Fabian: A Design and Application Space for Visualizing User Sessions of Virtual and Mixed Reality Environments. In: VMV2020. ACM, 2020. doi:10.2312/vmv.20201194

- Poguntke, Romina; Schneegass, Christina; van der Vekens, Lucas; Rzayev, Rufat; Auda, Jonas; Schneegass, Stefan; Schmidt, Albrecht: NotiModes: an investigation of notification delay modes and their effects on smartphone users. In: MuC '20: Proceedings of the Conference on Mensch und Computer. ACM, Magdeburg, Germany, 2020. doi:10.1145/3404983.3410006

- Saad, Alia; Elkafrawy, Dina Hisham; Abdennadher, Slim; Schneegass, Stefan: Are They Actually Looking? Identifying Smartphones Shoulder Surfing Through Gaze Estimation. In: ETRA. ACM, Stuttgart, Germany, 2020. doi:10.1145/3379157.3391422

- Safwat, Sherine Ashraf; Bolock, Alia El; Alaa, Mostafa; Faltaous, Sarah; Schneegass, Stefan; Abdennadher, Slim: The Effect of Student-Lecturer Cultural Differences on Engagement in Learning Environments - A Pilot Study. In: Communications in Computer and Information Science. Springer, 2020. doi:10.1007/978-3-030-51999-5_10

- Schneegass, Stefan; Sasse, Angela; Alt, Florian; Vogel, Daniel: Authentication Beyond Desktops and Smartphones: Novel Approaches for Smart Devices and Environments. In: CHI'20 Proceedings. ACM, Honolulu, HI, USA, 2020. doi:10.1145/3334480.3375144

- Ranasinghe, Champika; Holländer, Kai; Currano, Rebecca; Sirkin, David; Moore, Dylan; Schneegass, Stefan; Ju, Wendy: Autonomous Vehicle-Pedestrian Interaction Across Cultures: Towards Designing Better External Human Machine Interfaces (eHMIs). In: CHI'20 Proceedings. ACM, Honolulu, HI, USA, 2020. doi:10.1145/3334480.3382957

- Liebers, Jonathan; Schneegass, Stefan: Introducing Functional Biometrics: Using Body-Reflections as a Novel Class of Biometric Authentication Systems. In: CHI Extended Abstracts 2020. ACM, Honolulu, HI, USA, 2020. doi:10.1145/3334480.3383059

- Faltaous, Sarah; Eljaki, Salma; Schneegass, Stefan: User Preferences of Voice Controlled Smart Light Systems. In: MuC'19: Proceedings of Mensch und Computer 2019. ACM, New York, USA, 2019. doi:10.1145/3340764.3344437

- Detjen, Henrik; Faltaous, Sarah; Geisler, Stefan; Schneegass, Stefan: User-Defined Voice and Mid-Air Gesture Commands - for Maneuver-based Interventions in Automated Vehicles. In: MuC'19: Proceedings of Mensch und Computer 2019. ACM, New York, USA, 2019. doi:10.1145/3340764.3340798

- Poguntke, Romina; Mantz, Tamara; Hassib, Mariam; Schmidt, Albrecht; Schneegass, Stefan: Smile to Me - Investigating Emotions and their Representation in Text-based Messaging in the Wild. In: MuC'19: Proceedings of Mensch und Computer 2019. ACM, New York, USA, 2019. doi:10.1145/3340764.3340795

- Pascher, Max; Schneegass, Stefan; Gerken, Jens: SwipeBuddy A Teleoperated Tablet and Ebook-Reader Holder for a Hands-Free Interaction. In: Human-Computer Interaction – INTERACT 2019. Springer, Paphos, Cyprus, 2019. doi:10.1007/978-3-030-29390-1_39

- Pfeiffer, Max; Medrano, Samuel Navas; Auda, Jonas; Schneegass, Stefan: STOP! Enhancing Drone Gesture Interaction with Force Feedback. In: CHI'19 Proceedings. HAL, Glasgow, UK, 2019.

- Auda, Jonas; Pascher, Max; Schneegass, Stefan: Around the (Virtual) World - Infinite Walking in Virtual Reality Using Electrical Muscle Stimulation. In: CHI'19 Proceedings. ACM, Glasgow, 2019. doi:10.1145/3290605.3300661

Virtual worlds are infinite environments in which the user can move around freely. When shifting from controller-based movement to regular walking as an input, the limitations of the real world also limit the virtual world. Tackling this challenge, we propose the use of electrical muscle stimulation to limit the necessary real-world space and create an unlimited walking experience. We thereby actuate the users’ legs in a way that makes them deviate from their straight route and thus walk in circles in the real world while still walking straight in the virtual world. We report on a study comparing this approach to vision shift (the state-of-the-art approach) as well as to a combination of both approaches. The results show that combining both approaches in particular yields high potential for creating an infinite walking experience.

- Faltaous, Sarah; Haas, Gabriel; Barrios, Liliana; Seiderer, Andreas; Rauh, Sebastian Felix; Chae, Han Joo; Schneegass, Stefan; Alt, Florian: BrainShare: A Glimpse of Social Interaction for Locked-in Syndrome Patients. In: CHI EA '19: Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems. ACM, New York, NY, USA, 2019.

- Schneegass, Stefan; Poguntke, Romina; Machulla, Tonja Katrin: Understanding the Impact of Information Representation on Willingness to Share Information. In: CHI '19: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. ACM, New York, NY, United States, 2019. doi:10.1145/3290605.3300753

- Faltaous, Sarah; Liebers, Jonathan; Abdelrahman, Yomna; Alt, Florian; Schneegass, Stefan: VPID: Towards Vein Pattern Identification Using Thermal Imaging. In: i-com (2019), Vol. 18, No. 3, pp. 259-270. doi:10.1515/icom-2019-0009

Biometric authentication has received considerable attention recently. The vein pattern on the back of the hand is a unique biometric that can be measured through thermal imaging. Detecting this pattern provides an implicit approach that can authenticate users while they interact. In this paper, we present the Vein-Identification system, called VPID. It consists of a vein pattern recognition pipeline and an authentication part. We implemented six different vein-based authentication approaches by combining thermal imaging and computer vision algorithms. Through a study, we show that the approaches achieve a low false-acceptance rate (FAR) and a low false-rejection rate (FRR). Our findings show that the best approach is the Hausdorff distance-difference applied in combination with a Convolutional Neural Network (CNN) classification of stacked images.

- Pascher, Max; Schneegass, Stefan; Gerken, Jens: SwipeBuddy. In: Lamas, David; Loizides, Fernando; Nacke, Lennart; Petrie, Helen; Winckler, Marco; Zaphiris, Panayiotis (Eds.): Human-Computer Interaction – INTERACT 2019. Springer International Publishing, Cham, 2019, pp. 568-571.

Mobile devices are the core computing platform we use in our everyday life to communicate with friends, watch movies, or read books. For people with severe physical disabilities, such as tetraplegics, who cannot use their hands to operate such devices, these devices are barely usable. Tackling this challenge, we propose SwipeBuddy, a teleoperated robot allowing for touch interaction with a smartphone, tablet, or ebook-reader. The mobile device is mounted on top of the robot and can be teleoperated by a user through head motions and gestures controlling a stylus simulating touch input. Further, the user can control the position and orientation of the mobile device. We demonstrate the SwipeBuddy robot device and its different interaction capabilities.

- Antoun, Sara; Auda, Jonas; Schneegass, Stefan: SlidAR - Towards using AR in Education. In: MUM 2018: Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia. ACM, Cairo, Egypt, 2018. doi:10.1145/3282894.3289744

- Hoppe, Matthias; Knierim, Pascal; Kosch, Thomas; Funk, Markus; Futami, Lauren; Schneegass, Stefan; Henze, Niels; Schmidt, Albrecht; Machulla, Tonja: VRHapticDrones - Providing Haptics in Virtual Reality through Quadcopters. In: MUM 2018: Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia. ACM, Cairo, Egypt, 2018. doi:10.1145/3282894.3282898

- Saad, Alia; Chukwu, Michael; Schneegass, Stefan: Communicating Shoulder Surfing Attacks to Users. In: MUM 2018: Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia. ACM, Cairo, Egypt, 2018. doi:10.1145/3282894.3282919

- Schneegass, Christina; Terzimehić, Nađa; Nettah, Mariam; Schneegass, Stefan: Informing the Design of User-adaptive Mobile Language Learning Applications. In: MUM 2018: Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia. ACM, Cairo, Egypt, 2018. doi:10.1145/3282894.3282926

- Faltaous, Sarah; Elbolock, Alia; Talaat, Mostafa; Abdennadher, Slim; Schneegass, Stefan: Virtual Reality for Cultural Competences. In: MUM 2018: Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia. ACM, Cairo, Egypt, 2018. doi:10.1145/3282894.3289739

- Elagroudy, Passant; Abdelrahman, Yomna; Faltaous, Sarah; Schneegass, Stefan; Davis, Hilary: Workshop on Amplified and Memorable Food Interactions. In: MUM 2018: Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia. ACM, Cairo, Egypt, 2018. doi:10.1145/3282894.3286059

- Auda, Jonas; Hoppe, Matthias; Amiraslanov, Orkhan; Zhou, Bo; Knierim, Pascal; Schneegass, Stefan; Schmidt, Albrecht; Lukowicz, Paul: LYRA - smart wearable in-flight service assistant. In: ISWC '18: Proceedings of the 2018 ACM International Symposium on Wearable Computers. ACM, Singapore, Singapore, 2018. doi:10.1145/3267242.3267282

- Faltaous, Sarah; Baumann, M.; Schneegass, Stefan; Chuang, Lewis: Design Guidelines for Reliability Communication in Autonomous Vehicles. In: AutomotiveUI '18: Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications. ACM, Toronto, Canada, 2018. doi:10.1145/3239060.3239072

- Poguntke, Romina; Tasci, Cagri; Korhonen, Olli; Alt, Florian; Schneegass, Stefan: AVotar - exploring personalized avatars for mobile interaction with public displays. In: MobileHCI '18: Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct. ACM, Barcelona, Spain, 2018. doi:10.1145/3236112.3236113

- Weber, Dominik; Voit, Alexandra; Auda, Jonas; Schneegass, Stefan; Henze, Niels: Snooze! - investigating the user-defined deferral of mobile notifications. In: MobileHCI '18: Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services. ACM, Barcelona, Spain, 2018. doi:10.1145/3229434.3229436

- Poguntke, Romina; Kiss, Francisco; Kaplan, Ayhan; Schmidt, Albrecht; Schneegass, Stefan: RainSense - exploring the concept of a sense for weather awareness. In: MobileHCI '18: Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct. ACM, Barcelona, Spain, 2018. doi:10.1145/3236112.3236114

- Voit, Alexandra; Salm, Marie Olivia; Beljaars, Miriam; Kohn, Stefan; Schneegass, Stefan: Demo of a smart plant system as an exemplary smart home application supporting non-urgent notifications. In: NordiCHI '18: Proceedings of the 10th Nordic Conference on Human-Computer Interaction. ACM, Oslo, Norway, 2018. doi:10.1145/3240167.3240231

- Auda, Jonas; Schneegass, Stefan; Faltaous, Sarah: Control, Intervention, or Autonomy? Understanding the Future of SmartHome Interaction. In: Conference on Human Factors in Computing Systems (CHI). ACM, Montreal, Canada, 2018.

- Hassib, Mariam; Schneegass, Stefan; Henze, Niels; Schmidt, Albrecht; Alt, Florian: A Design Space for Audience Sensing and Feedback Systems. In: CHI EA '18: Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, Montreal, Canada, 2018. doi:10.1145/3170427.3188569

- Kiss, Francisco; Boldt, Robin; Pfleging, Bastian; Schneegass, Stefan: Navigation Systems for Motorcyclists: Exploring Wearable Tactile Feedback for Route Guidance in the Real World. In: CHI '18: Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, Montreal, Canada, 2018. doi:10.1145/3173574.3174191

- Voit, Alexandra; Pfähler, Ferdinand; Schneegass, Stefan: Posture Sleeve: Using Smart Textiles for Public Display Interactions. In: CHI EA '18: Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, Montreal, Canada, 2018. doi:10.1145/3170427.3188687

- Auda, Jonas; Weber, Dominik; Voit, Alexandra; Schneegass, Stefan: Understanding User Preferences towards Rule-based Notification Deferral. In: CHI EA '18: Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, Montreal, Canada, 2018. doi:10.1145/3170427.3188688

- Henze, Niels: Design and evaluation of a computer-actuated mouse. In: MUM '17: Proceedings of the 16th International Conference on Mobile and Ubiquitous Multimedia. ACM, Stuttgart, Germany, 2017. doi:10.1145/3152832.3152862

- Hassib, Mariam; Khamis, Mohamed; Friedl, Susanne; Schneegass, Stefan; Alt, Florian: Brainatwork - logging cognitive engagement and tasks in the workplace using electroencephalography. In: MUM '17: Proceedings of the 16th International Conference on Mobile and Ubiquitous Multimedia. ACM, Stuttgart, Germany, 2017. doi:10.1145/3152832.3152865

- Voit, Alexandra; Schneegass, Stefan: FabricID - using smart textiles to access wearable devices. In: MUM '17: Proceedings of the 16th International Conference on Mobile and Ubiquitous Multimedia. ACM, Stuttgart, Germany, 2017. doi:10.1145/3152832.3156622

- Mayer, Simon; Schneegass, Stefan: IoT 2017 - the Seventh International Conference on the Internet of Things. In: IoT '17: Proceedings of the Seventh International Conference on the Internet of Things. ACM, Linz, Austria, 2017. doi:10.1145/3131542.3131543

- Duente, Tim; Schneegass, Stefan; Pfeiffer, Max: EMS in HCI - challenges and opportunities in actuating human bodies. In: MobileHCI '17: Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services. ACM, Vienna, Austria, 2017. doi:10.1145/3098279.3119920

- Oberhuber, Sascha; Kothe, Tina; Schneegass, Stefan; Alt, Florian: Augmented Games - Exploring Design Opportunities in AR Settings With Children. In: IDC '17: Proceedings of the 2017 Conference on Interaction Design and Children. ACM, Stanford, California, USA, 2017. doi:10.1145/3078072.3079734

- Knierim, Pascal; Kosch, Thomas; Schwind, Valentin; Funk, Markus; Kiss, Francisco; Schneegass, Stefan; Henze, Niels: Tactile Drones - Providing Immersive Tactile Feedback in Virtual Reality through Quadcopters. In: CHI EA '17: Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems. ACM, Denver, Colorado, USA, 2017. doi:10.1145/3027063.3050426

- Schmidt, Albrecht; Schneegass, Stefan; Kunze, Kai; Rekimoto, Jun; Woo, Woontack: Workshop on Amplification and Augmentation of Human Perception. In: CHI EA '17: Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems. ACM, Denver, Colorado, USA, 2017. doi:10.1145/3027063.3027088

- Hassib, Mariam; Pfeiffer, Max; Schneegass, Stefan; Rohs, Michael; Alt, Florian: Emotion Actuator - Embodied Emotional Feedback through Electroencephalography and Electrical Muscle Stimulation. In: Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI '17). ACM, New York, USA, 2017, pp. 6133-6146. doi:10.1145/3025453.3025953

The human body reveals emotional and bodily states through measurable signals, such as body language and electroencephalography. However, such manifestations are difficult to communicate to others remotely. We propose EmotionActuator, a proof-of-concept system to investigate the transmission of emotional states in which the recipient performs emotional gestures to understand and interpret the state of the sender. We call this kind of communication embodied emotional feedback, and present a prototype implementation. To realize our concept, we chose four emotional states: amused, sad, angry, and neutral. We designed EmotionActuator through a series of studies to assess emotional classification via EEG, and to create an EMS gesture set by comparing composed gestures from the literature to sign-language gestures. In a final study with the end-to-end prototype, interviews revealed that participants like implicit sharing of emotions and find the embodied output to be immersive, but want control over which emotions are shared and with whom. This work contributes a proof-of-concept system and a set of design recommendations for designing embodied emotional feedback systems.

- Alt, Florian: Stay Cool! Understanding Thermal Attacks on Mobile-based User Authentication. In: Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI '17). ACM, New York, USA, 2017, pp. 3751-3763. doi:10.1145/3025453.3025461

PINs and patterns remain among the most widely used knowledge-based authentication schemes. As thermal cameras become ubiquitous and affordable, we foresee a new form of threat to user privacy on mobile devices. Thermal cameras allow performing thermal attacks, in which heat traces left by authentication can be used to reconstruct passwords. In this work, we investigate in detail the viability of exploiting thermal imaging to infer PINs and patterns on mobile devices. We present a study (N=18) in which we evaluated how properties of PINs and patterns influence their resistance to thermal attacks. We found that thermal attacks are indeed viable on mobile devices; overlapping patterns significantly decrease the thermal attack success rate from 100% to 16.67%, while PINs remain vulnerable (>72% success rate) even with duplicate digits. We conclude with recommendations for users and designers of authentication schemes on how to resist thermal attacks.